The Accidental Success of ChatGPT

The success of ChatGPT surprised even OpenAI and revolutionized the field of AI, taking us from language models to automated language agents.

ChatGPT was never meant to be the product that brought AI to the global market. It was built as a simple tech demo where people in and around the tech space could interact with the latest in language modelling, a way for OpenAI to flex its research muscles and wow the world with a shiny new toy. OpenAI expected an outcome closer to its DALL·E 2 demo: a simple UI that would showcase the reasoning capabilities of AI models, build more interest in the field, and maybe earn some investment dollars for further research. But as we now know, ChatGPT became the most popular AI invention to date, and its popularity quickly got out of hand. Almost everyone with access to a computer or a phone has now interacted with ChatGPT, far exceeding OpenAI’s humble tech demo aspirations and turning the demo into something much closer to a true product. ChatGPT has since generated seemingly endless discussion about how AI will affect every sector of our economy, and as a consequence, its unintended exposure to the world has permanently changed the direction of AI research.

A New Task

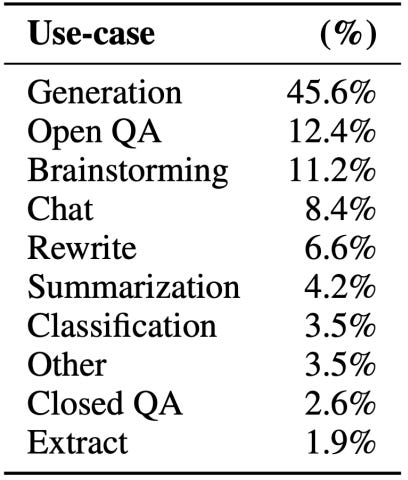

The most interesting outcome of ChatGPT’s unexpected exposure was the new set of tasks that language models were being asked to do. While AI researchers were typically asking models like ChatGPT to perform tasks like translation or classification, non-technical users were asking ChatGPT to generate code, summarize articles, hold a conversation, and answer questions. These users had created a whole slew of novel tasks that ChatGPT had not been designed to handle, and while the language model behind ChatGPT was good at some of them, many requests fell far outside its capabilities. Some of these requests were unreasonable for the technology at the time: ChatGPT understandably failed at writing legal arguments and handling customer service, causing massive headaches for untrained users. But these headaches taught AI researchers something major: they showed how the public wanted to use AI in day-to-day life.

This set of non-technical users unintentionally brought about a rapid change in the field of Natural Language Processing (NLP) and forever altered the path of AI research. Until then, researchers had thought of technologies such as ChatGPT as models, statistical beings that try to estimate our world, but few end-users viewed ChatGPT as a model, and so they refused to interact with it as such. Because of ChatGPT’s ability to pass a non-standard Turing test, people wanted to use this technology to tackle tasks far outside the scope of pure language and traditional NLP. The public wanted agents: a technology that could reason, research, and act in an automated fashion. This revelation set off a cascade of research and a whole host of novel research fields within AI. Papers investigating intelligent prompting, context retrieval, and agent-environment interactions sprang up all around the AI space, augmenting traditional NLP research on architectures and datasets. All of a sudden, language modelling went from being “the technology” to being a mere component of a language agent that was empowered to do so much more. This change in overall goal has required researchers to build new benchmarks for evaluating language agents and new conceptual frameworks for constructing these agents efficiently and effectively. We have even seen initiatives to make prompting “optimizable” and “trainable”, replacing hand-crafted and hand-tuned agent prompts with automated prompt optimization in code. All of this novel research has been created to keep up with the rapid increase in AI demand as we try to make AI ready for deployment, a deployment date that came much sooner than anyone at OpenAI or within the field of AI research had anticipated.

And this is where we are today, building the AI plane in flight. We are witnessing language agents being deployed in live environments while researchers are still trying to understand this new language agent paradigm. How do we make sure that these language agents and the decisions that they make are safe? How do we make sure that these language agents don’t violate security protocols? How do we make sure that these language agents don’t hallucinate and provide misinformation? These are all questions that we don’t have definitive answers to at the moment. The lack of answers to these questions is causing serious growing pains as the field of AI stumbles through its infancy. Stories such as Air Canada’s customer service chatbot promising a customer a refund that the airline’s policy did not allow show some of the clear limitations of AI and why the current hype may have outstripped the capabilities. However, new technologies and techniques that can solve these problems are rapidly being developed thanks to more money for AI research than ever before, and it is quite possible that all of these problems will be solved on a timeline much faster than anyone expects. In short, we are at a key inflection point in the story of AI as companies make a mad dash to incorporate the new technology while researchers try to adapt language modelling into something no one anticipated it would be just a few short years ago. This adaptation has already proven to be a painful one, but given time, it seems that AI may prove to be the key revolution of the 21st century.

Final Thoughts

While it may sound as though I am pessimistic, I am truly excited and curious to see what the field of AI research will come up with to solve these problems and truly bring AI to market. We are learning more each day about how these language agents fail, and with each failure we continue to improve their performance. It is truly one of the most exciting times for AI research and AI discussion, with a constant stream of new papers pushing the field forward. While I do have my reservations about the speed at which AI will bring value to the market, there is no question that AI will change the way we interact with the world. I can’t wait to see how the field is going to grow and change over the next 5 years. And to think, all of this frenzy started with the unexpected success of a language modelling tech demo.

If you would like to know more about some of the technologies that are helping transform language models into language agents, I have attached a few research papers at the end of this blog post (Warning: these can be dense if you have not had experience reading AI research before). They cover topics such as prompting, RAG, benchmarking, and even the conceptual design of language agents. There are many papers relevant to this field of study, so treat these as a starting point if you truly want to understand this subculture in the realm of AI.

Additionally, I plan to make a post on using Python libraries to create a programmable RAG (Retrieval Augmented Generation) agent for document queries. That post will be geared towards programmers and act as a walk-through on how to create a RAG agent. If this is something that interests you, consider following me, as I plan to post this demo within the next 2–3 weeks. If you are not a programmer but have some familiarity with Python, you may still be able to download the code and play around with the RAG agent. All code from this endeavor will be free and open-source.

For those of you who would like more high-level discussions of AI like this post, I do have some stories about AI Transparency and AI Hallucinations that match the tone used here. While most of my posts tend to be geared towards programmers and academics, I do try to make all of the information accessible to a non-technical audience, so feel free to check out other posts I have made as well as some of my upcoming posts:

Practical Programmable RAG Parts 1 and 2

Creating Mixture of Experts From Scratch

How to Steal an LLM

If any of these topics seem interesting to you, consider following me on Medium or here on my Substack (I post the same content to both platforms for free). I post twice a month on Mondays, covering topics such as AI research papers, AI philosophy, and AI programming projects.

Recommended Papers

ReAct: Using prompting to enable LMs to reason and act.

Chain-of-Thought: Using prompting to make LMs use intermediate reasoning steps for response generation.

Tree-of-Thought: Providing tree structure to LM reasoning.

Retrieval Augmented Generation: Gathering relevant documents as a method for hallucination minimization.

CoALA: Proposing a new language agent framework for future agent design.

MLAgentBench: A new benchmark for LM agent performance.

OpenAgents: A platform for using and hosting language agents.
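
To make the retrieval idea behind the Retrieval Augmented Generation entry above a little more concrete, here is a minimal Python sketch of the retrieval step. It is not the method from the paper: real systems typically use learned embeddings and a vector store, while this toy version scores documents with plain bag-of-words cosine similarity. The function names (`tokenize`, `cosine_similarity`, `retrieve`, `build_prompt`) and the example documents are all made up for illustration, and no actual language model is called.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents whose word counts are most similar to the query."""
    query_counts = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_counts, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query, documents, k=1):
    """Place the retrieved documents in front of the question (hypothetical template)."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Toy documents invented for this example.
DOCUMENTS = [
    "Refund requests must be submitted within 30 days of purchase.",
    "Our support line is open Monday through Friday.",
    "Premium plans include priority email support.",
]

if __name__ == "__main__":
    print(build_prompt("How many days do I have to request a refund?", DOCUMENTS))
```

The resulting prompt would then be sent to a language model, so the model answers from the retrieved text rather than from memory alone, which is the intuition behind using retrieval to reduce hallucinations.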